It's hard to imagine a world without computers. They're everywhere, from the smartphones in our pockets to the vast data centers that power the internet.

But how did we get here?

Let's journey back to the early days of computing and uncover the story of the first computer.

The Seeds of an Idea

Humans have long built tools to make calculation easier. Ancient cultures, including the Greeks and the Chinese, used the abacus, a manual calculating aid whose beads help a person keep track of numbers while they do the arithmetic. However, the idea of a programmable machine, one that could be instructed to perform different tasks, emerged only in the 19th century.

One of the key figures in this early history was Charles Babbage, an English mathematician and inventor. He designed a machine called the Analytical Engine, which was to be programmed with punched cards and could, in principle, carry out a wide range of calculations. Although the Analytical Engine was never completed, his ideas laid the groundwork for future developments.

The Birth of the Modern Computer

The real breakthrough came in the mid-20th century, during World War II. The war created a pressing need for rapid calculations, especially in fields like cryptography and ballistics. This urgency led to the development of the first electronic digital computers.

One of the most famous early computers was Colossus, used by British codebreakers at Bletchley Park from 1944 to help decipher encrypted German teleprinter messages.

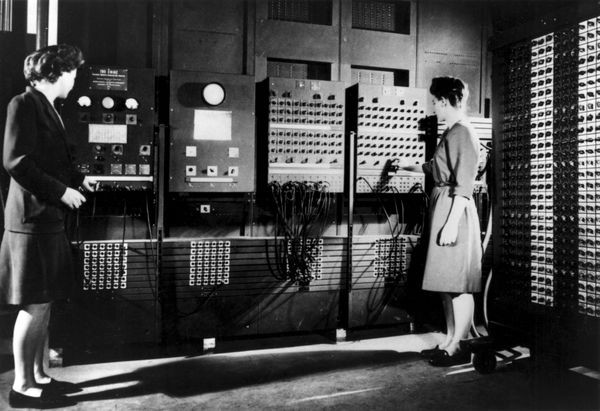

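Another significant development was the ENIAC (Electronic Numerical Integrator and Computer), a massive machine built at the University of Pennsylvania for the U.S. Army and completed in 1945 to calculate artillery firing tables. Impressive as Colossus and ENIAC were, they were limited in flexibility: Colossus was built for a single job, and both machines were set up for a task by adjusting switches and plugging cables rather than by loading a program stored in memory.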

The Transistor Revolution

A major turning point in computer history came with the invention of the transistor at Bell Labs in 1947. Transistors replaced bulky vacuum tubes, making computers smaller, faster, and more reliable. Transistorized machines, which appeared in the late 1950s, became the second generation of computers, succeeding the vacuum-tube machines of the first generation.

How Computers Work

To understand how computers function, we need to know their basic components and the underlying principles.

- Hardware: The physical components of a computer, such as the processor, memory, storage devices, and input/output devices.
  - Processor (CPU): Often called the "brain" of the computer, the CPU executes instructions and performs calculations.
  - Memory (RAM): Holds the data and instructions currently in use. RAM is fast but volatile: its contents are lost when the power is switched off.
  - Storage devices: Hard drives and solid-state drives store data persistently, so it survives when the computer is turned off.
  - Input/output devices: Keyboards, mice, monitors, and printers let us interact with the computer.
- Software: The intangible part of a computer, the set of instructions that tell the hardware what to do.
  - Operating system: Manages the hardware and provides a user interface. Examples include Windows, macOS, and Linux.
  - Applications: Programs designed to perform specific tasks, such as word processing, web browsing, and gaming.
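Applications rarely talk to hardware directly; they ask the operating system, which manages the devices on their behalf. As a rough illustration, the Python sketch below uses standard-library calls to ask the operating system about the processor and storage of whatever machine runs it. The function name `describe_machine` is our own, chosen for this example, and the values it reports depend entirely on the machine.

```python
import os
import platform
import shutil


def describe_machine(path: str = ".") -> dict:
    """Ask the operating system (not the hardware directly) about this machine."""
    usage = shutil.disk_usage(path)  # size of the storage device holding `path`
    return {
        # Name of the OS, e.g. "Linux", "Windows", or "Darwin" (macOS).
        "operating_system": platform.system(),
        # Processor architecture, e.g. "x86_64"; may be "" if unknown.
        "processor_architecture": platform.machine(),
        # Number of logical CPUs; os.cpu_count() returns None if it can't tell.
        "logical_cpus": os.cpu_count(),
        # Storage capacity and free space, converted from bytes to gigabytes.
        "disk_total_gb": round(usage.total / 1e9, 1),
        "disk_free_gb": round(usage.free / 1e9, 1),
    }


for key, value in describe_machine().items():
    print(f"{key}: {value}")
```

The program itself is an application; every answer it prints comes from the operating system, which in turn queries the hardware.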

How Computers Process Information

At the heart of a computer's operation is the binary number system. This system uses only two digits, 0 and 1, to represent information. Each digit is called a bit. A group of eight bits is called a byte.
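To make bits and bytes concrete, here is a small Python sketch. The helper names `to_bits` and `from_bits` are illustrative, not standard functions; they simply wrap Python's built-in `format` and `int`. Because each of a byte's eight bits can be 0 or 1, a byte can hold 2^8 = 256 different values, from 0 to 255.

```python
def to_bits(value: int, width: int = 8) -> str:
    """Return the binary digits of a non-negative integer, zero-padded to `width` bits."""
    if not 0 <= value < 2 ** width:
        raise ValueError(f"{value} does not fit in {width} bits")
    return format(value, f"0{width}b")


def from_bits(bits: str) -> int:
    """Convert a string of 0s and 1s back into an integer."""
    return int(bits, 2)


print(2 ** 8)                 # 256 possible values in one byte
print(to_bits(5))             # 00000101  (4 + 1)
print(to_bits(255))           # 11111111  (largest value a byte can hold)
print(from_bits("00101010"))  # 42        (32 + 8 + 2)

# Text is stored as bytes too: each character below becomes one number.
for byte in "Hi".encode("utf-8"):
    print(byte, to_bits(byte))
```

In the final loop, "H" is stored as 72 (01001000) and "i" as 105 (01101001), which is how a string of letters ends up as a string of bits.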

When you turn on a computer, the processor first runs a small startup program stored in firmware, which loads the operating system into memory. Every program is ultimately a sequence of simple machine instructions that the CPU can carry out directly. The CPU fetches each instruction from memory, decodes it to work out which operation it names, executes it, and then moves on to the next one. A modern processor repeats this cycle billions of times per second.
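The fetch-decode-execute cycle can be sketched with a toy processor in Python. The instruction set here (the opcodes `HALT`, `LOAD`, `ADD`, and `PRINT`, plus a single accumulator register) is invented purely for illustration; real CPUs have far larger instruction sets and encode instructions in binary. A Python list stands in for memory, and a program counter records where the next instruction is.

```python
from typing import List

# Hypothetical opcodes for a made-up toy CPU (not a real instruction set).
HALT, LOAD, ADD, PRINT = 0, 1, 2, 3


def run(memory: List[int]) -> List[int]:
    """Run a toy program stored in `memory` and return every value it printed.

    Each instruction occupies two memory cells: an opcode, then an operand.
    """
    accumulator = 0  # register holding the current result
    pc = 0           # program counter: address of the next instruction
    printed: List[int] = []
    while True:
        # Fetch: read the instruction stored at the program counter.
        opcode, operand = memory[pc], memory[pc + 1]
        pc += 2  # advance to the following instruction
        # Decode and execute: pick the operation the opcode names and do it.
        if opcode == LOAD:
            accumulator = operand
        elif opcode == ADD:
            accumulator += operand
        elif opcode == PRINT:
            printed.append(accumulator)
        elif opcode == HALT:
            return printed
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 2}")


# The program: LOAD 7, ADD 5, PRINT, HALT (unused operands are 0).
program = [LOAD, 7, ADD, 5, PRINT, 0, HALT, 0]
print(run(program))  # [12]
```

The program and its data are just numbers in memory; the loop gives them meaning by decoding them one instruction at a time. A real CPU performs the same kind of loop in hardware, with no interpreter underneath.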

The Future of Computing

The journey of computing is far from over. We're constantly pushing the boundaries of technology, developing faster, more powerful, and more efficient computers. From artificial intelligence to quantum computing, the future holds exciting possibilities.

As we continue to innovate, it's important to remember the humble beginnings of the computer. The visionaries who laid the foundation for this incredible technology have shaped the world we live in today.