Graphics Processing Units (GPUs) have grown into a technology that goes far beyond their original purpose of rendering graphics.

These powerful computational engines have become essential in everything from gaming and artificial intelligence to scientific research and cryptocurrency mining.

But what exactly makes GPUs so special, and why have they become such a critical component of contemporary technology?

The Origins: From Graphics to General-Purpose Computing

GPUs were initially designed with a single purpose: to accelerate graphics rendering for video games and professional visualization. Traditional Central Processing Units (CPUs) struggled to keep up with the sheer volume of mathematical calculations required to generate smooth, realistic graphics in real time.

Graphics cards were developed to offload these intensive graphical computations, allowing for more immersive and visually stunning digital experiences.

Parallel Processing: The Core Innovation

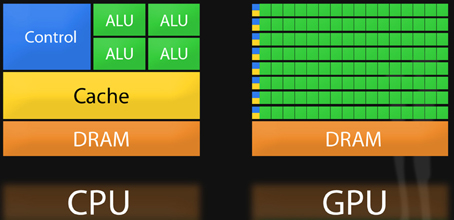

The fundamental technology that sets GPUs apart is their unique architectural design focused on parallel processing. Unlike CPUs, which are optimized for sequential processing and can handle a few complex tasks quickly, GPUs are engineered to process thousands of simpler tasks simultaneously. This architectural difference is key to understanding their incredible computational power.

How Parallel Processing Works

-A typical desktop CPU might have somewhere between 4 and 16 cores, each capable of handling complex instructions rapidly.

-In contrast, a modern GPU can have thousands of smaller, more specialized processing units.

-Complex problems are broken down into smaller, manageable chunks that these units work on in parallel.

-Each processing unit can work on a different part of a problem concurrently, dramatically reducing overall computation time.
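
The decomposition described above can be sketched in a few lines of Python. This is only an analogy that runs on the CPU, not GPU code: the function names (`split_into_chunks`, `sum_of_squares`, `parallel_sum_of_squares`) and the choice of four workers are illustrative assumptions, and in standard CPython the threads will not actually speed up this arithmetic. What it shows is the pattern itself: split the data, let each worker handle its own chunk, then combine the partial results.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, num_chunks):
    """Split a list into contiguous pieces of near-equal size."""
    chunk_size = -(-len(values) // num_chunks)  # ceiling division
    return [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]


def sum_of_squares(chunk):
    """The small, simple task each worker performs on its own chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, num_workers=4):
    """Decompose the problem, process the chunks concurrently, then combine."""
    if not values:
        return 0
    chunks = split_into_chunks(values, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


data = list(range(1_000))
result = parallel_sum_of_squares(data)
print(result)
```

A GPU applies the same idea at a much finer grain, with thousands of hardware units each handling a tiny slice of the data.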

The Expansion of GPU Applications

Artificial Intelligence and Machine Learning

The rise of AI and machine learning has catapulted GPUs from a niche graphics technology to a fundamental component of computational research and development.

Neural networks, which form the backbone of modern AI systems, require massive amounts of parallel computations – exactly the type of workload GPUs excel at handling.

Consider deep learning models that train on massive datasets:

-Training an advanced AI model can require processing billions of mathematical operations.

-GPUs can perform these calculations many times faster than traditional CPUs, often by an order of magnitude or more.

-This speed enables researchers to develop more complex and sophisticated AI systems in significantly less time.
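
To see why neural networks suit parallel hardware, consider a single fully connected layer. The sketch below is plain Python for illustration only; `dense_layer` and `multiply_add_count` are hypothetical helper names, and the 4096 by 4096 layer size is an assumed example rather than a figure from any particular model. Each output value depends only on its own row of weights, so every row could in principle be computed at the same time.

```python
def dense_layer(weights, inputs, bias):
    """Compute outputs[i] = sum_j weights[i][j] * inputs[j] + bias[i].

    Each output depends only on its own row of weights, so all rows could be
    evaluated at the same time by separate processing units.
    """
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]


def multiply_add_count(num_outputs, num_inputs):
    """Number of multiply-add operations in one dense layer evaluation."""
    return num_outputs * num_inputs


weights = [[1.0, 2.0, 3.0],
           [0.0, -1.0, 0.5]]
inputs = [2.0, 1.0, 4.0]
bias = [0.5, 0.0]

outputs = dense_layer(weights, inputs, bias)
print(outputs)  # [16.5, 1.0]

# One pass through a single 4096 x 4096 layer (an assumed size).
ops_for_large_layer = multiply_add_count(4096, 4096)
print(ops_for_large_layer)  # 16777216
```

Multiply that per-layer count by many layers, many training examples, and many passes over the data, and the total quickly reaches the billions of operations mentioned above.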

Scientific Research and Simulation

GPUs have become indispensable in fields requiring complex computational simulations:

-Climate modeling

-Molecular dynamics

-Astrophysical research

-Quantum mechanics simulations

These domains involve processing enormous datasets and performing intricate mathematical calculations that benefit immensely from the parallel processing capabilities of GPUs.

The Technical Architecture of Modern GPUs

Memory and Computation Design

Features of Modern GPUs

-High-bandwidth memory systems

-Specialized computational cores

-Advanced cooling mechanisms

-Dedicated data transfer infrastructures

A typical GPU might include:

-Thousands of smaller cores optimized for specific types of calculations

-Dedicated high-speed memory (VRAM) for rapid data access

-Specialized arithmetic logic units designed for floating-point calculations

-Complex interconnect systems allowing rapid communication between processing units

CUDA and OpenCL: Programming GPUs

To harness GPU power, specialized programming frameworks like NVIDIA's CUDA and the open-standard OpenCL have been developed. These frameworks allow developers to write code that can effectively utilize the parallel processing capabilities of GPUs.
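
The programming model behind these frameworks can be approximated in ordinary Python. The following is a sequential emulation, not CUDA or OpenCL code: `vector_add_kernel` and `launch` are hypothetical names invented for this sketch and are not part of either framework's API. It illustrates the central idea that the developer writes a small function for one logical thread, and the runtime executes that function across a grid of thread indices.

```python
def vector_add_kernel(thread_index, a, b, out):
    """Body executed by one logical thread: handle a single element."""
    if thread_index < len(out):  # guard: the launch may have extra threads
        out[thread_index] = a[thread_index] + b[thread_index]


def launch(kernel, num_blocks, threads_per_block, *args):
    """Emulate a kernel launch by visiting every (block, thread) pair.

    A GPU would run these iterations concurrently; this loop is sequential.
    """
    for block in range(num_blocks):
        for thread in range(threads_per_block):
            global_index = block * threads_per_block + thread
            kernel(global_index, *args)


a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(a)

threads_per_block = 4
num_blocks = (len(a) + threads_per_block - 1) // threads_per_block  # round up
launch(vector_add_kernel, num_blocks, threads_per_block, a, b, out)
print(out)  # [11.0, 22.0, 33.0, 44.0, 55.0]
```

Because the number of launched threads is rounded up to a whole number of blocks, the bounds check inside the kernel keeps the extra threads from touching memory that does not exist. Real GPU kernels commonly use the same guard.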

Why GPUs Are Increasingly Essential

1. Computational Efficiency

GPUs offer very high computational efficiency for specific types of tasks. For highly parallel workloads they can perform calculations many times faster than traditional CPUs, in some cases by a factor of a hundred or more, making them crucial for:

-Real-time graphics rendering

-Complex scientific simulations

-Machine learning model training

-Cryptocurrency mining

-Video encoding and decoding
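
The qualifier "specific types of tasks" matters, and Amdahl's law gives a simple way to see why: the overall speedup is limited by whatever portion of the work cannot be parallelized. The sketch below uses assumed, illustrative numbers (a hypothetical 100x speedup on the parallel portion) rather than measurements from real hardware, and `amdahl_speedup` is a name chosen for this example.

```python
def amdahl_speedup(parallel_fraction, parallel_speedup):
    """Overall speedup when only part of a program is accelerated."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / parallel_speedup)


# Assumed scenario: the parallel portion runs 100x faster on a GPU.
mostly_parallel = amdahl_speedup(0.99, 100.0)  # 99% of the work parallelizes
half_parallel = amdahl_speedup(0.50, 100.0)    # only 50% parallelizes

print(f"99% parallel: {mostly_parallel:.1f}x overall")
print(f"50% parallel: {half_parallel:.1f}x overall")
```

Under these assumptions, the workload that is 99% parallel runs roughly 50 times faster overall, while the one that is only half parallel barely doubles in speed. This is why the tasks listed above, which are dominated by independent, repetitive calculations, benefit the most.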

2. Energy Efficiency

Despite their immense computational power, modern GPUs are becoming increasingly energy-efficient. Advanced manufacturing processes and architectural innovations mean that each new generation can deliver more computation per watt of electricity consumed.

3. Versatility

The versatility of GPUs has expanded dramatically. What began as a technology for rendering video game graphics has transformed into a critical component across multiple industries:

-Healthcare (medical imaging)

-Financial modeling

-Autonomous vehicle development

-Robotics

-Virtual and augmented reality

Challenges and Future Developments

While GPUs have made incredible strides, challenges remain:

-Increasing complexity of programming parallel systems

-Managing heat generation

-Balancing cost with performance

-Developing more specialized architectures for specific computational needs

The future of GPU technology looks incredibly promising, with ongoing research focusing on:

-More energy-efficient designs

-Increased computational density

-Better integration with AI and machine learning frameworks

-Quantum computing interfaces

Graphics Processing Units represent more than just a technological innovation; they symbolize a fundamental shift in computational thinking. By embracing parallel processing and specialized computational architectures, GPUs have transformed from niche graphics accelerators to foundational technologies driving innovation across multiple domains.

As our computational challenges become more complex and data-intensive, GPUs are likely to continue playing a pivotal role in pushing the boundaries of what's possible in computing, research, and technological innovation.